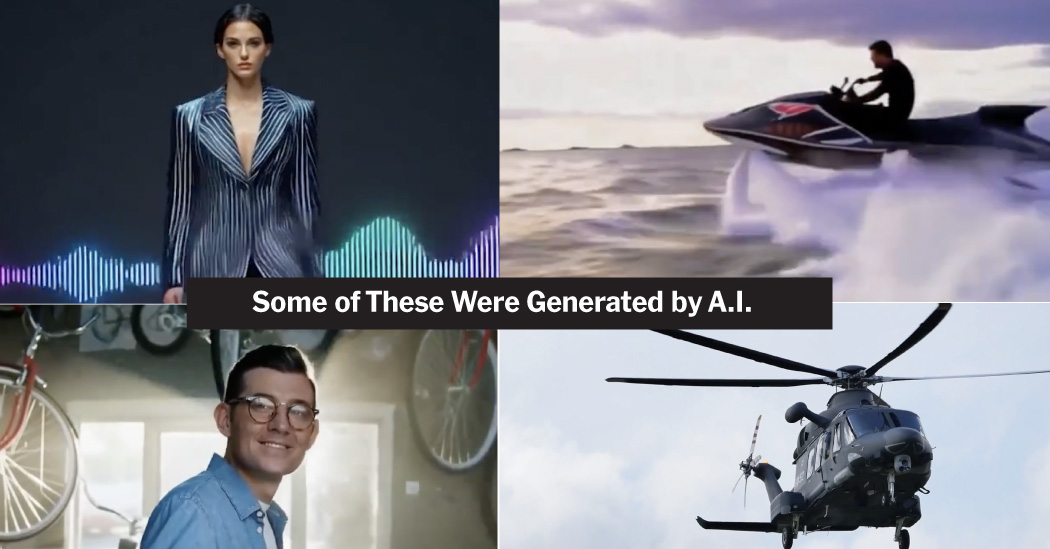

Tools powered by artificial intelligence can create lifelike images of people who do not exist.

Ever since the public release of tools like Dall-E and Midjourney in the past couple of years, the A.I.-generated images they’ve produced have stoked confusion about breaking news, fashion trends and Taylor Swift.

Distinguishing between a real face and an A.I.-generated one has proved especially confounding.

Multiple published studies found that faces of white people created by A.I. systems were perceived as more realistic than genuine photographs of white people, a phenomenon called hyper-realism.

Researchers believe A.I. tools excel at producing hyper-realistic faces because they were trained on tens of thousands of images of real people. Those training datasets contained images of mostly white people, resulting in hyper-realistic white faces. (The over-reliance on images of white people to train A.I. is a known problem in the tech industry.)

Participants' confusion was less apparent with nonwhite faces, researchers found.

Participants were also asked to indicate how sure they were in their selections, and researchers found that higher confidence correlated with a higher chance of being wrong.

“We were very surprised to see the level of over-confidence that was coming through,” said Dr. Amy Dawel, an associate professor at Australian National University, who was an author on two of the studies.

“It points to the thinking styles that make us more vulnerable on the internet and more vulnerable to misinformation,” she added.

The idea that A.I.-generated faces could be deemed more authentic than actual people startled experts like Dr. Dawel, who fear that digital fakes could help the spread of false and misleading messages online.

A.I. systems had been capable of producing photorealistic faces for years, though there were typically telltale signs that the images were not real. A.I. systems struggled to create ears that looked like mirror images of each other, for example, or eyes that looked in the same direction.

But as the systems have advanced, the tools have become better at creating faces.

The hyper-realistic faces used in the studies tended to be less distinctive, researchers said, and hewed so closely to average proportions that they failed to trigger suspicion among the participants. And when participants looked at real pictures of people, they seemed to fixate on features that drifted from average proportions — such as a misshapen ear or larger-than-average nose — considering them a sign of A.I. involvement.

The images in the study came from StyleGAN2, an image model trained on a public repository of photographs containing 69 percent white faces.

Study participants said they relied on a few features to make their decisions, including how proportional the faces were, the appearance of skin, wrinkles, and facial features like eyes.