A bipartisan group of senators released a long-awaited legislative plan for artificial intelligence on Wednesday, calling for billions in funding to propel American leadership in the technology while offering few details on regulations to address its risks.

In a 20-page document titled “Driving U.S. Innovation in Artificial Intelligence,” the Senate leader, Chuck Schumer, and three colleagues called for spending $32 billion annually by 2026 for government and private-sector research and development of the technology.

The lawmakers recommended creating a federal data privacy law and said they supported legislation, planned for introduction on Wednesday, that would prevent the use of realistic misleading technology known as deepfakes in election campaigns. But they said congressional committees and agencies should come up with regulations on A.I., including protections against health and financial discrimination, the elimination of jobs, and copyright violations caused by the technology.

“It’s very hard to do regulations because A.I. is changing too quickly,” Mr. Schumer, a New York Democrat, said in an interview. “We didn’t want to rush this.”

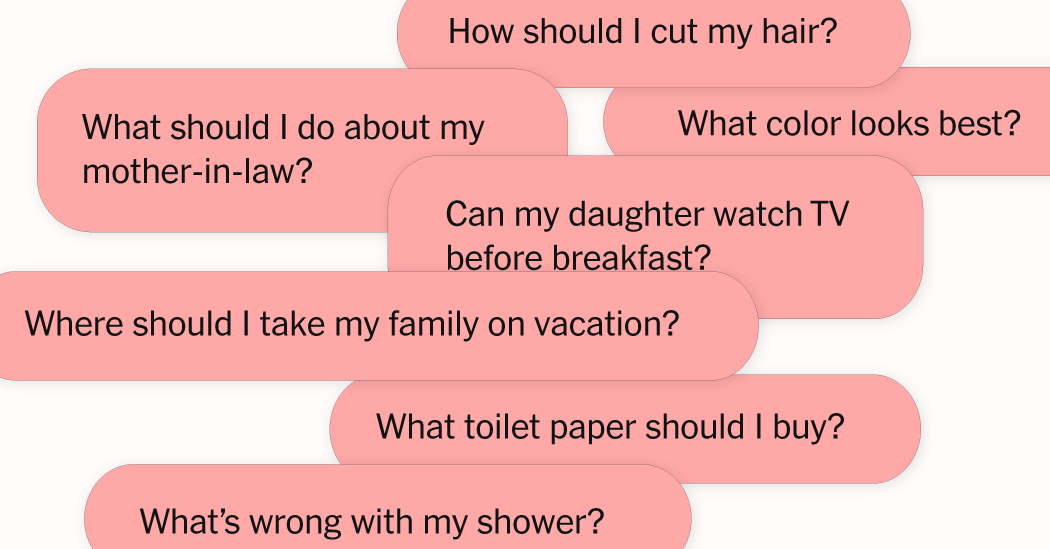

He designed the road map with two Republican senators, Mike Rounds of South Dakota and Todd Young of Indiana, and a fellow Democrat, Senator Martin Heinrich of New Mexico, after their yearlong listening tour to hear concerns about new generative A.I. technologies. Those tools, like OpenAI’s ChatGPT, can generate realistic and convincing images, videos, audio and text. Tech leaders have warned about the potential harms of A.I., including the obliteration of entire job categories, election interference, discrimination in housing and finance, and even the replacement of humankind.

The senators’ decision to delay A.I. regulation widens a gap between the United States and the European Union, which this year adopted a law that prohibits A.I.’s riskiest uses, including some facial recognition applications and tools that can manipulate behavior or discriminate. The European law requires transparency around how systems operate and what data they collect. Dozens of U.S. states have also proposed privacy and A.I. laws that would prohibit certain uses of the technology.

Outside of recent legislation mandating the sale or ban of the social media app TikTok, Congress hasn’t passed major tech legislation in years, despite multiple proposals.

“It’s disappointing because at this point we’ve missed several windows of opportunity to act while the rest of the world has,” said Amba Kak, a co-executive director of the nonprofit AI Now Institute and a former adviser on A.I. to the Federal Trade Commission.

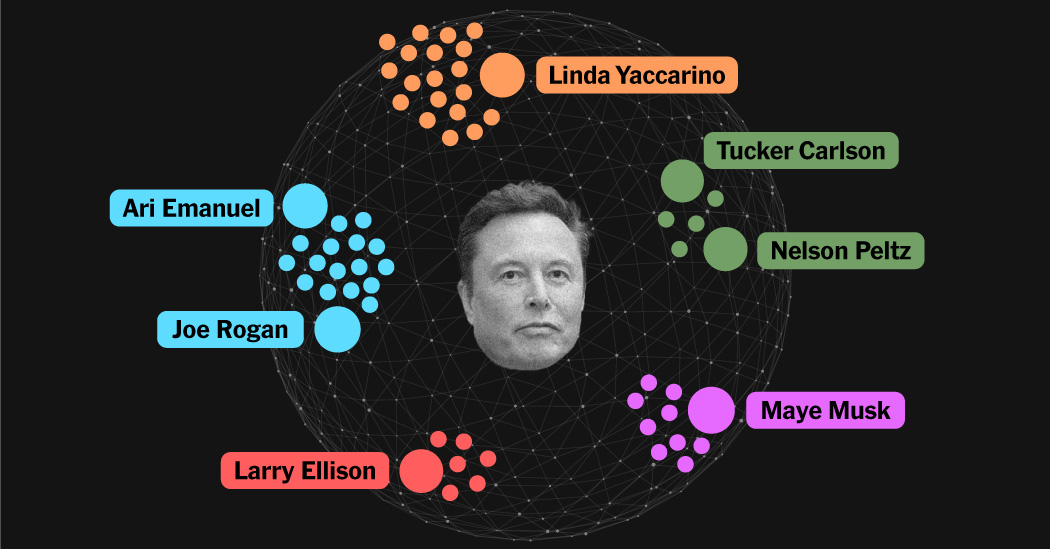

Mr. Schumer’s efforts on A.I. legislation began in June with a series of high-profile forums that brought together tech leaders including Elon Musk of Tesla, Sundar Pichai of Google and Sam Altman of OpenAI.

(The New York Times has sued OpenAI and its partner, Microsoft, over use of the publication’s copyrighted works in A.I. development.)

Mr. Schumer said in the interview that through the forums, lawmakers had begun to understand the complexity of A.I. technologies and how expert agencies and congressional committees were best equipped to create regulations.

The legislative road map encourages greater federal investment in the growth of domestic research and development.

“This is sort of the American way — we are more entrepreneurial,” Mr. Schumer said in the interview, adding that the lawmakers hoped to make “innovation the North Star.”

In a separate briefing with reporters, he said the Senate was more likely to consider A.I. proposals piecemeal instead of in one large legislative package.

“What we’d expect is that we would have some bills that certainly pass the Senate and hopefully pass the House by the end of the year,” Mr. Schumer said. “It won’t cover the whole waterfront. There’s too much waterfront to cover, and things are changing so rapidly.”

He added that his staff had spoken with Speaker Mike Johnson’s office.

Maya Wiley, president of the Leadership Conference on Civil and Human Rights, participated in the first forum. She said that the closed-door meetings were “tech industry heavy” and that the report’s focus on promoting innovation overshadowed the real-world harms that could result from A.I. systems, noting that health and financial tools had already shown signs of discrimination against certain ethnic and racial groups.

Ms. Wiley has called for greater focus on the vetting of new products to make sure they are safe and operate without biases that can target certain communities.

“We should not assume that we don’t need additional rights,” she said.