
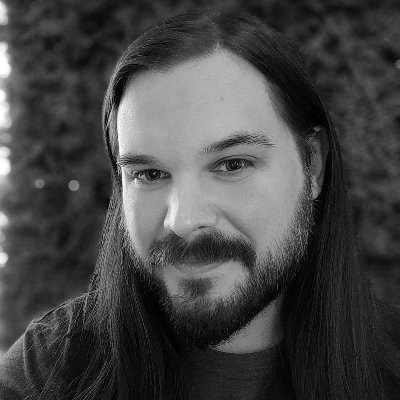

When Reid Southen, a movie concept artist based in Michigan, tried an A.I. image generator for the first time, he was intrigued by its power to transform simple text prompts into images.

But after he learned how A.I. systems were trained on other people’s artwork, his curiosity gave way to more unsettling thoughts: Were the tools exploiting artists and violating copyright in the process?

Inspired by tests he saw circulating online, he asked Midjourney, an A.I. image generator, to create an image of Joaquin Phoenix from "Joker." In seconds, the system made an image nearly identical to a frame from the 2019 film.

Prompt from Reid Southen: "Create an image of Joaquin Phoenix Joker movie, 2019, screenshot from a movie, movie scene"
Midjourney's response (generated by A.I.), compared with a copyrighted image from Warner Bros.

Note: Mr. Southen’s full prompt was: “Joaquin Phoenix Joker movie, 2019, screenshot from a movie, movie scene –ar 16:9 –v 6.0.” The prompt specifies Midjourney’s version number (6.0) and an aspect ratio (16:9).

Gary Marcus, a professor emeritus at New York University and A.I. expert who runs the newsletter “Marcus on A.I.,” collaborated with Mr. Southen to run even more prompts. Mr. Marcus suggested removing specific copyrighted references. “Videogame hedgehog” returned Sonic, Sega’s wisecracking protagonist. “Animated toys” created a tableau featuring Woody, Buzz and other characters from Pixar’s “Toy Story.” When Mr. Southen and Mr. Marcus tried “popular movie screencap,” out popped Iron Man, the Marvel character, in a familiar pose.

“What they’re doing is clear evidence of exploitation and using I.P. that they don’t have licenses to,” said Mr. Southen, referring to A.I. companies’ use of intellectual property.

Prompt from Mr. Southen: "popular movie screencap"
Midjourney's response (generated by A.I.), compared with a copyrighted image from Marvel.

Note: Mr. Southen’s full prompt was: “popular movie screencap –ar 1:1 –v 6.0.” The prompt specifies Midjourney’s version number (6.0) and an aspect ratio (1:1).

The tests, which were replicated by other artists and by reporters at The New York Times and published in Mr. Marcus's newsletter, raise questions about the training data used to create A.I. systems and whether the companies are violating copyright laws.

Several lawsuits, from actors like Sarah Silverman and authors like John Grisham, have put that question before the courts. (The Times has sued OpenAI, the company behind ChatGPT, and Microsoft, a major backer of the company, for infringing its copyright on news content.)

A.I. companies have responded that using copyrighted material is protected under "fair use," a part of copyright law that allows copyrighted material to be used without permission in certain cases. They also said that reproducing copyrighted material too closely is a bug, often called "memorization," that they are trying to fix. Memorization can happen when the training data contains many similar or identical images, A.I. experts said. But the problem also occurs with material that appears only rarely in the training data, like emails.

For example, when Mr. Southen and Mr. Marcus asked Midjourney for a "Dune movie screencap" from the "Dune movie trailer," the model may have had only limited material to draw from. The result was a frame nearly indistinguishable from one in the movie's trailer.

Prompt from Mr. Southen: "Create an image of Dune movie screencap, 2021, Dune movie trailer"
Midjourney's response (generated by A.I.), compared with a copyrighted image from Warner Bros.

Note: Mr. Southen’s full prompt was: “dune movie screencap, 2021, dune movie trailer –ar 16:9 –v 6.0.” The prompt specifies Midjourney’s version number (6.0) and an aspect ratio (16:9).

A spokeswoman for OpenAI pointed to a blog post in which the company argued that training on publicly accessible data was “fair use” and that it provided several ways for creators and artists to opt out of its training process.

Midjourney did not respond to requests for comment. The company edited its terms of service in December, adding that users cannot use the service to “violate the intellectual property rights of others, including copyright.” Microsoft declined to comment.

Warner Bros., which owns copyrights to several films tested by Mr. Southen and Mr. Marcus, declined to comment.

“Nobody knows how this is going to come out, and anyone who tells you ‘It’s definitely fair use’ is wrong,” said Keith Kupferschmid, the president and chief executive of the Copyright Alliance, an industry group that represents copyright holders. “This is a new frontier.”

A.I. companies could violate copyright in two ways, Mr. Kupferschmid said: They could train on copyrighted material that they have not licensed, or they could reproduce copyrighted material when users enter a prompt.

The experiments by Mr. Southen, Mr. Marcus and others exposed instances of both. The pair published their findings earlier this month in IEEE Spectrum, the magazine of the Institute of Electrical and Electronics Engineers.

Prompt from Mr. Southen: "Create an image of "The Last of Us 2," Ellie with guitar in front of tree"
Midjourney's response (generated by A.I.), compared with a copyrighted image from Naughty Dog, the video game developer.

Note: Mr. Southen’s full prompt was: “the last of us 2 ellie with guitar in front of tree –v 6.0 –ar 16:9.” The prompt specifies Midjourney’s version number (6.0) and an aspect ratio (16:9).

A.I. companies said they had established guardrails that could prevent their A.I. systems from producing material that violates copyright. But Mr. Marcus said that despite those strategies, copyrighted material still slips through.

When Times journalists asked ChatGPT to create an image of SpongeBob SquarePants, the children's animated television character, it produced an image remarkably similar to the cartoon. The chatbot said the image merely resembled the copyrighted work. The differences were subtle: the character's tie was yellow instead of red, and it had eyebrows instead of eyelashes.

Prompt from N.Y.T.: "Create an image of SpongeBob SquarePants"
ChatGPT's response (generated by A.I.): "Here is the image of the character you described, resembling SpongeBob SquarePants."

When Times journalists omitted SpongeBob's name from another request, ChatGPT created a character that was even closer to the copyrighted work.

Prompt from N.Y.T.: "Create an image of an animated sponge wearing pants."
ChatGPT's response (generated by A.I.): "Here is the image of the animated sponge wearing pants."
Compared with a copyrighted image from Viacom.

Prof. Kathryn Conrad, who teaches English at the University of Kansas, started her own tests because she was concerned that A.I. systems could replace and devalue artists by training on their intellectual property.

In her experiments, ultimately published with Mr. Marcus, she asked Microsoft Bing for an “Italian video game character” without mentioning Mario, the famed character owned by Nintendo. The image generator from Microsoft created artwork that closely resembled the copyrighted work. Microsoft’s tool uses a version of DALL-E, the image generator created by OpenAI.

Prompt from Professor Conrad: "Could you create an original image of an Italian video game character?"
Microsoft Bing's response: images generated by A.I.

Since that experiment was published in December, the image generator has produced different results. An identical prompt, input in January by Times reporters, resulted in images that strayed more significantly from the copyrighted material, suggesting to Professor Conrad that the company may be tightening its guardrails.

Prompt from N.Y.T.: "Could you create an original image of an Italian video game character?"
Microsoft Bing's response: images generated by A.I.

“This is a Band-Aid on a bleeding wound,” Professor Conrad said of the safeguards implemented by OpenAI and others. “This isn’t going to be fixed easily just with a guardrail.”